Microsoft has unveiled its first custom silicon, launching two chips, the Maia 100 (M100) AI accelerator and the Cobalt 100 CPU, designed to handle artificial intelligence and general-purpose workloads on its Azure cloud platform.

The two chips represent a first foray into semiconductors for Microsoft and see the company follow in the footsteps of its public cloud rivals, Amazon’s AWS and Google Cloud, which run their own chips in their data centres alongside those provided by vendors such as Nvidia.

Maia 100 AI accelerator and Cobalt 100 CPU unveiled

Both new chips will be available early next year, and are designed on Arm architecture, which is increasingly being deployed in cloud data centres as an alternative to chips built on Intel’s x86 architecture, the long-time market leader. Microsoft already offers some Arm-based CPUs on Azure, having struck a partnership with Ampere Computing last year, but claims Cobalt will deliver a 40% performance increase compared to Ampere’s chips.

The Maia 100 will apparently “power some of the largest internal AI workloads running on Microsoft Azure”, such as the Microsoft Copilot AI assistant and the Azure OpenAI Service, which allows Microsoft’s cloud customers to access services from AI lab OpenAI, the creator of ChatGPT.

Microsoft is “building the infrastructure to support AI innovation, and we are reimagining every aspect of our data centres to meet the needs of our customers”, said Scott Guthrie, executive vice-president of the company’s cloud and AI group. “At the scale we operate, it’s important for us to optimise and integrate every layer of the infrastructure stack to maximize performance, diversify our supply chain and give customers infrastructure choice.”

Customers will be able to choose from a wider range of chips from other vendors, too, with Microsoft introducing virtual machines featuring Nvidia’s H100 Tensor Core GPU, the most powerful AI chip currently on the market. It also plans to add the vendor’s H200 Tensor Core GPU, launched this week, to its fleet next year to “support larger model inferencing with no reduction in latency”.

It is also adding accelerated virtual machines using AMD’s top-of-the-range MI300X design to Azure.

Microsoft was an early adopter of AI tools through its partnership with OpenAI, in which it invested billions of dollars earlier this year. OpenAI CEO Sam Altman is enthusiastic about the M100’s potential, and said: “We were excited when Microsoft first shared their designs for the Maia chip, and we’ve worked together to refine and test it with our models.”

Content from our partners

Collaboration along the entire F&B supply chain can optimise and enhance business

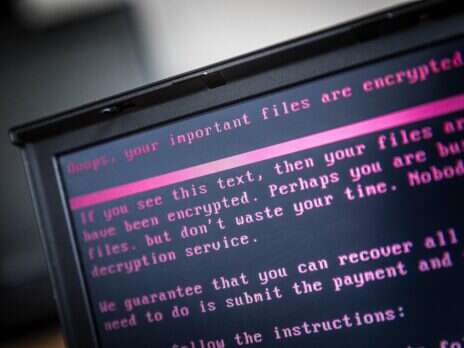

Inside ransomware’s hidden costs

The future of data-driven enterprises is transforming data centre requirements

Altman added that he believes Azure’s AI architecture “paves the way for training more capable models and making those models cheaper for our customers.”

Will Microsoft’s new chips give it an AI edge?

Microsoft is the last of the public cloud market’s big three to launch its own processors. Amazon offers its own range of Arm-based Graviton processors as an option to AWS customers, while Google uses in-house tensor processing units, or TPUs, for its AI systems.

James Sanders, principal analyst for cloud and infrastructure at CCS Insight, said: “Microsoft notes that Cobalt delivers up to 40% performance improvement over current generations of Azure Arm chips. Rather than depend on external vendors to deliver the part Microsoft needs, building this in-house and manufacturing it at a partner fab provides Microsoft greater flexibility to gain the compute power it needs.”

Sanders argues that the benefits of developing the Maia 100 are clear. He said: “With Microsoft’s investment in OpenAI, and the burgeoning popularity of OpenAI products such as ChatGPT as well as Microsoft’s Copilot functionality in Office, GitHub, Bing, and others, the creation of a custom accelerator was inevitable. At the scale which Microsoft is operating, bringing this computing capacity online while delivering the unit economics to make this direction viably profitable, requires a custom accelerator.”
